Vector search finds relevant code but misses the blast radius. Learn how combining lightweight code graphs with RAG creates cross-repository context that makes code changes across 50+ repositories predictable—without heavy graph infrastructure.

Every engineer working in a real enterprise codebase knows this pattern. You search for code across fifty repositories. You open ten browser tabs. You scan twenty functions. Then you realize the place where the actual change needs to happen never appeared in your results.

The problem is not search. The problem is context and blast radius.

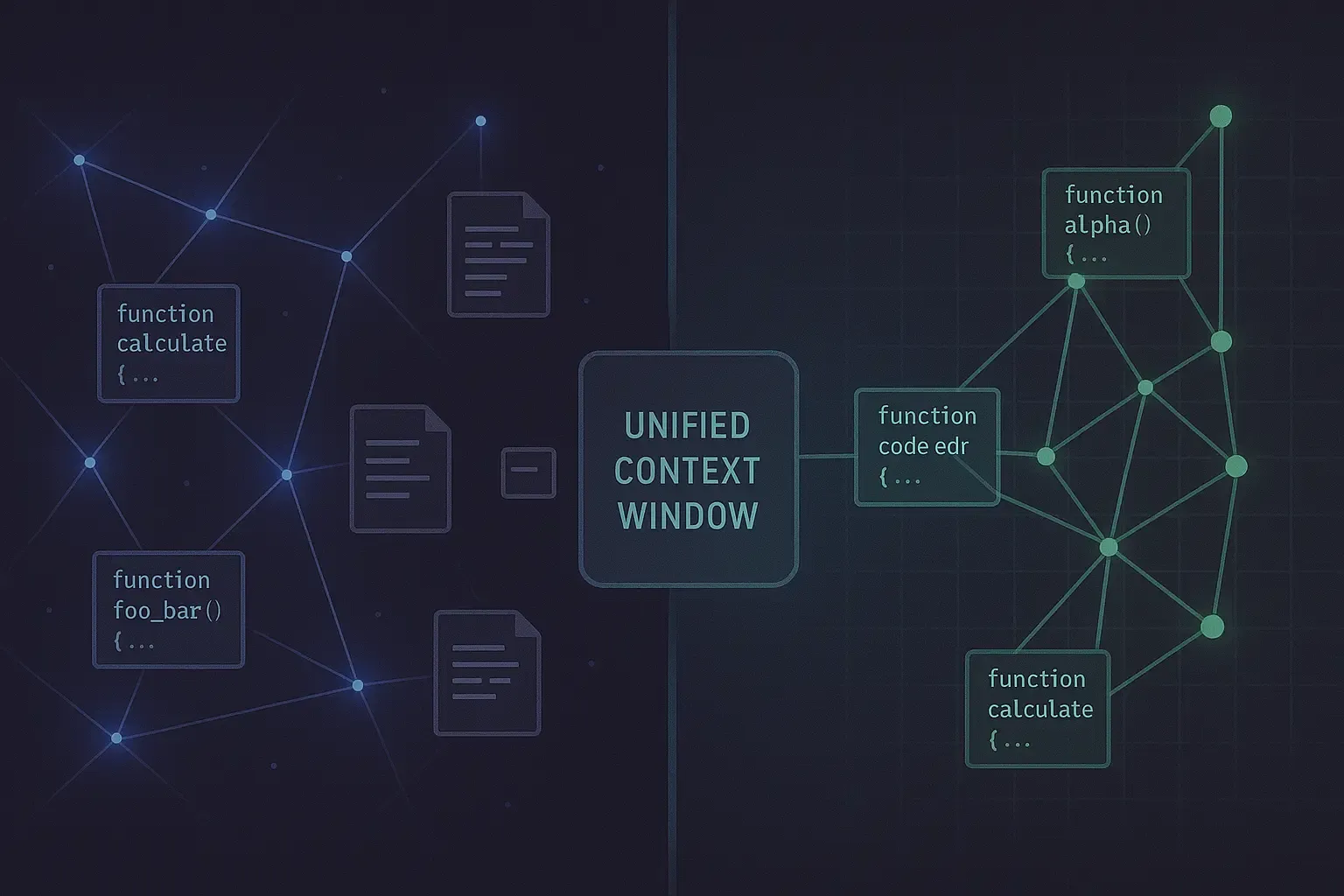

Over the past few months, we developed an approach that combines Retrieval-Augmented Generation (RAG) with a lightweight code graph. This is not a typical graph database story. We did not use Neo4j. We did not build a massive knowledge graph. Instead, we built something that behaves like a neighbourhood signal that complements vector search.

This combination solves the recall problem without turning the system into a research project.

We store around 500,000 code files spanning more than 50 repositories. Each file is chunked carefully and embedded into Pinecone. Each file also carries rich metadata that an LLM generates during ingestion. This gives us strong initial retrieval. When a developer asks a question, our Pinecone index returns the top relevant chunks with impressive precision.

But Pinecone alone does not understand software structure.

It cannot know that one function is called from another file. It cannot know that a shared library change requires synchronized changes in five other repositories. It cannot know that a small protocol change in a core repo will break tests in a different service.

No embedding model will magically recover those relationships unless you explicitly encode them.

This is where a lightweight code graph becomes a necessary second signal.

Most teams assume that adding a graph means introducing heavy infrastructure. In reality, you only need two simple collections.

The nodes collection represents files or symbols. Each node holds:

The edges collection represents relationships:

- calls: function A invokes function B
- imports: file A imports module B
- used_by: symbol A is referenced by symbol B
- test_touches_code: test file A covers function B
- config_touches_module: config A affects service B

This graph sits in a relational store or document store. You never need complex graph queries at scale. You only need fast lookups by node ID and repository.
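To make the two-collection idea concrete, here is a minimal sketch that uses SQLite as the relational store. The table layout, the column names (`node_id`, `repo`, `path`, `kind`) and the helper functions are assumptions made for illustration; the article does not specify the actual schema.

```python
import sqlite3


def create_graph_store(conn):
    """Create the two collections. Column names are illustrative assumptions."""
    conn.executescript(
        """
        CREATE TABLE IF NOT EXISTS nodes (
            node_id TEXT PRIMARY KEY,   -- e.g. "repo:path/to/file.py"
            repo    TEXT NOT NULL,
            path    TEXT NOT NULL,
            kind    TEXT NOT NULL       -- "file" or "symbol"
        );
        CREATE TABLE IF NOT EXISTS edges (
            src       TEXT NOT NULL,
            dst       TEXT NOT NULL,
            edge_type TEXT NOT NULL,    -- calls, imports, used_by, ...
            PRIMARY KEY (src, dst, edge_type)
        );
        CREATE INDEX IF NOT EXISTS idx_nodes_repo ON nodes(repo);
        CREATE INDEX IF NOT EXISTS idx_edges_dst ON edges(dst);
        """
    )


def neighbours(conn, node_id):
    """Return (neighbour_id, edge_type) pairs in both edge directions."""
    rows = conn.execute(
        "SELECT dst, edge_type FROM edges WHERE src = ? "
        "UNION "
        "SELECT src, edge_type FROM edges WHERE dst = ?",
        (node_id, node_id),
    ).fetchall()
    return sorted(rows)


def nodes_in_repo(conn, repo):
    """Return all node IDs that belong to one repository."""
    rows = conn.execute(
        "SELECT node_id FROM nodes WHERE repo = ? ORDER BY node_id", (repo,)
    ).fetchall()
    return [row[0] for row in rows]
```

Both lookups are plain indexed reads, which is all the neighbourhood expansion needs. A document store with the same two collections would work equally well.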

The graph is built offline using tree-sitter for static analysis. It is cheap to maintain and easy to recompute when code changes.
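As a rough illustration of how cheap edge extraction can be, the sketch below derives `imports` edges from a Python source file. Note the swap: the pipeline described here uses tree-sitter, whereas this example uses Python's built-in `ast` module so that it stays dependency-free and only handles Python files. The function name and the edge tuple shape are assumptions.

```python
import ast


def import_edges(file_id, source):
    """Extract (file_id, imported_module, "imports") edges from Python source.

    Stand-in for a tree-sitter pass: same idea, but limited to Python.
    Relative imports without a module name (``from . import x``) are skipped.
    """
    tree = ast.parse(source)
    edges = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                edges.add((file_id, alias.name, "imports"))
        elif isinstance(node, ast.ImportFrom) and node.module:
            edges.add((file_id, node.module, "imports"))
    return sorted(edges)
```

Because the extraction is a pure function of one file's contents, recomputing the edges for a changed file means deleting that file's old edges and inserting the new ones.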

The pipeline runs in four stages that progressively build complete cross-repository context.

For any developer query, we ask Pinecone for the top K chunks based on semantic similarity. We map those chunks back to their file-level nodes in the graph.

This gives us the nucleus of relevant code—the files that semantically match what the developer is asking about.
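The mapping from chunks to file-level nodes can be sketched as follows. It assumes each vector match has already been converted into a plain dict with a `score` and a `metadata` entry holding `repo` and `path`; those field names and the `repo:path` node ID format are illustrative assumptions, not the actual index schema.

```python
def chunks_to_file_nodes(matches):
    """Collapse chunk-level matches into file-level seed nodes.

    Several chunks can come from the same file, so each file keeps
    the best score among its chunks.
    """
    best = {}
    for match in matches:
        meta = match["metadata"]
        node_id = f"{meta['repo']}:{meta['path']}"
        best[node_id] = max(best.get(node_id, float("-inf")), match["score"])
    return best
```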

For each node returned by Pinecone, we traverse one or two hops around the graph. We fetch:

We keep this expansion deliberately small. The goal is to capture the immediate blast radius, not to enumerate every transitive dependency in the codebase.
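A bounded expansion of this kind is essentially a breadth-first search with a hop limit. The sketch below assumes the graph has been loaded into an in-memory adjacency mapping and treats all edge types alike; the `max_hops` and `max_nodes` defaults are illustrative, not tuned values.

```python
from collections import deque


def expand(seeds, adjacency, max_hops=2, max_nodes=50):
    """Breadth-first expansion around the seed nodes.

    Returns {node_id: hop_distance}, with seeds at distance 0.
    Stops at max_hops and never returns more than max_nodes nodes.
    """
    hops = {seed: 0 for seed in seeds}
    queue = deque(hops)  # iterating the dict also drops duplicate seeds
    while queue:
        node = queue.popleft()
        if hops[node] == max_hops:
            continue  # do not walk past the hop limit
        for neighbour in adjacency.get(node, ()):
            if neighbour in hops:
                continue
            if len(hops) >= max_nodes:
                return hops  # hard cap keeps the context small
            hops[neighbour] = hops[node] + 1
            queue.append(neighbour)
    return hops
```

Keeping the hop distance for every node is useful later, because the reranking step can use it as a measure of structural proximity.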

We now have two candidate sets: the Pinecone results and the graph expansion results.

We pull embeddings for the expanded set and rerank everything using a secondary scoring rule that considers both semantic similarity and structural proximity. A file that is semantically distant but structurally adjacent (one hop away in the call graph) gets boosted. A file that is semantically similar but structurally isolated gets weighted accordingly.

This produces a more complete picture of what files actually matter for the query.
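One way such a secondary scoring rule could look is a linear blend of cosine similarity and a proximity term that decays with hop distance. The blend weight `alpha` and the `1 / (1 + hops)` decay are assumptions chosen for illustration; the article does not state the actual formula.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rerank(query_vec, candidates, alpha=0.7):
    """Order candidates by blended semantic and structural score.

    candidates: {node_id: (embedding, hops)} where hops is the graph
    distance from the nearest vector-search seed (0 for the seeds).
    """
    scored = []
    for node_id, (embedding, hops) in candidates.items():
        semantic = cosine(query_vec, embedding)
        structural = 1.0 / (1 + hops)  # 1.0 for seeds, 0.5 at one hop, ...
        scored.append((alpha * semantic + (1 - alpha) * structural, node_id))
    # Highest score first; ties broken by node ID for stable output.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [node_id for _, node_id in scored]
```

With this rule, a file one hop away outranks an equally dissimilar file two hops away, which is the boost for structural adjacency described above.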

This is where the system prevents the most common multi-repo failures.

Shared library protection: If the query touches a shared library, the system forces at least one representative caller from each downstream repository to appear in the final context. This prevents the model from suggesting a breaking change that ignores half the call sites.

Protocol change detection: If the query involves API contracts, serialization, or cross-service communication, the system forces relevant config files and integration tests to appear as well.

These simple rules prevent many downstream errors. The LLM is far less likely to suggest a change that breaks other services when those services are visible in its context window.
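The shared library rule can be sketched as a post-processing step over the reranked context. The function name, the edge tuple shape, and the choice to treat `calls` and `imports` edges as evidence of a caller are assumptions made for this example.

```python
def add_representative_callers(context, shared_node, edges, repo_of):
    """Ensure every downstream repo has at least one caller in the context.

    context:     ordered list of node IDs already selected
    shared_node: node ID of the shared library file being changed
    edges:       iterable of (src, dst, edge_type) tuples
    repo_of:     {node_id: repo name}
    """
    callers = sorted(
        src
        for src, dst, edge_type in edges
        if dst == shared_node and edge_type in ("calls", "imports")
    )
    # Repos that already have a caller in the context need nothing extra.
    covered = {repo_of[n] for n in context if n in callers}
    covered.add(repo_of[shared_node])  # the library's own repo is not downstream
    result = list(context)
    for caller in callers:
        repo = repo_of[caller]
        if repo not in covered:
            result.append(caller)
            covered.add(repo)
    return result
```

The protocol change rule would follow the same pattern, with `test_touches_code` and `config_touches_module` edges selecting the files to force in.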

Once context is collected, the LLM writes the final answer or generates patches with full awareness of the blast radius.

A pure graph approach fails because graph relationships do not capture intent or semantics. Two functions might be structurally connected but completely irrelevant to the current query.

A pure embedding approach fails because embeddings cannot see the ripple effect of code changes. Two files might be semantically similar but have no structural relationship that would require coordinated changes.

When you combine both signals, you get the best of both worlds.

The vector stage picks the nucleus of the answer—the code that semantically matches the query. The graph stage ensures that nucleus is surrounded by every important neighbour—the code that would be affected by changes. The reranking step cleans up noise from both sources.

The final answer becomes reliable because the essential files are present.

This setup also scales extremely well. The graph is lightweight. The lookups are narrow and fast. The expensive computational work sits where it belongs: inside the vector index.

When a query represents a true multi-repository change, you can improve accuracy further by introducing a planning layer before retrieval.

Before running any search, let the model plan what changes it expects to make. The plan might look like:

Once you have this plan, you run separate targeted retrievals for each planned change area. Each retrieval uses the Graph RAG pipeline independently, ensuring that every area of the codebase gets its own focused context window.
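The per-area retrieval loop can be sketched as below. It assumes the plan is a list of short change-area descriptions and that `retrieve` is a callable wrapping the whole pipeline (vector search, expansion, reranking, safety checks); both are hypothetical stand-ins, since the plan format is not specified here.

```python
def retrieve_for_plan(plan, retrieve, top_k=5):
    """Run one focused retrieval per planned change area.

    Returns (contexts, shared) where contexts maps each area to its own
    file list and shared lists files that appear in more than one area.
    """
    contexts = {}
    seen_in = {}
    for area in plan:
        # dict.fromkeys drops duplicates while keeping the ranked order.
        files = list(dict.fromkeys(retrieve(area)))[:top_k]
        contexts[area] = files
        for file_id in files:
            seen_in.setdefault(file_id, []).append(area)
    shared = sorted(f for f, areas in seen_in.items() if len(areas) > 1)
    return contexts, shared
```

Files that show up under several planned areas are worth surfacing separately, since they are likely coordination points between the changes.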

This planning-before-retrieval approach increases recall dramatically for distributed systems where changes naturally span multiple services.

Graph RAG is not a fancy research term. It is a practical combination of two signals that were never meant to compete.

Vector search gives you relevance—finding code that semantically matches what you are asking about.

The code graph gives you structure—understanding how that code connects to everything else.

Put them together and you finally get context that spans actual codebases rather than isolated files.

If you work in any modern engineering team, you already know the cost of missing context. A change that touches one repository often needs coordinated changes in five more. Without a graph, you miss the dependencies. Without embeddings, you do not know where to begin.

The right balance makes the whole system feel obvious in retrospect.

This approach is how we now think about building cross-repository context for enterprise engineering teams. It is the first time code changes across fifty repositories have felt predictable.

The key insights:

You do not need heavy graph infrastructure. Two simple collections with node and edge data, built from static analysis, are sufficient for neighbourhood expansion.

Vector search and graph traversal are complementary, not competing. Use embeddings for semantic relevance. Use the graph for structural completeness. Rerank to combine both signals.

Safety checks prevent the worst failures. Forcing downstream callers and affected tests into context stops the model from suggesting changes that break other services.

Planning improves complex queries. For multi-repository changes, let the model plan first, then retrieve context for each planned change area separately.

The result is a RAG system that understands code the way engineers understand code—not as isolated files, but as interconnected systems where changes ripple across boundaries.

ByteBell provides cross-repository context for enterprise engineering teams. Our version-aware knowledge graphs combine semantic search with structural understanding to make code changes across 50+ repositories reliable. Every answer includes citations to exact files, line numbers, and commits. Learn more at bytebell.com.